Evolution of Battlefield Robotics: Emergence of Autonomous Warriors

The field of battlefield robotics has seen a significant transformation over recent decades, moving from simple remote-controlled devices to sophisticated autonomous systems that can make independent combat decisions. This evolution has been driven by advances in artificial intelligence (AI), machine learning, robotics, and sensor technology, heralding the arrival of true autonomous warriors on the battlefield.

In the earlier stages of battlefield robotics, the primary focus was on remotely operated vehicles (ROVs), which were essentially sophisticated remote-controlled machines. Operators, often far from the front lines, controlled these robots to perform tasks such as bomb disposal, reconnaissance, and surveillance. However, their capabilities were limited by the need for constant human control and by their vulnerability to communication delays and signal interference.

As technology progressed, the concept of semi-autonomous systems gained traction. These systems, enhanced with better sensors and rudimentary AI, could perform some tasks independently but still required a human to supervise and make critical decisions. A notable leap in this direction was the development of drones that could autonomously execute flight patterns but needed human input for strike decisions.

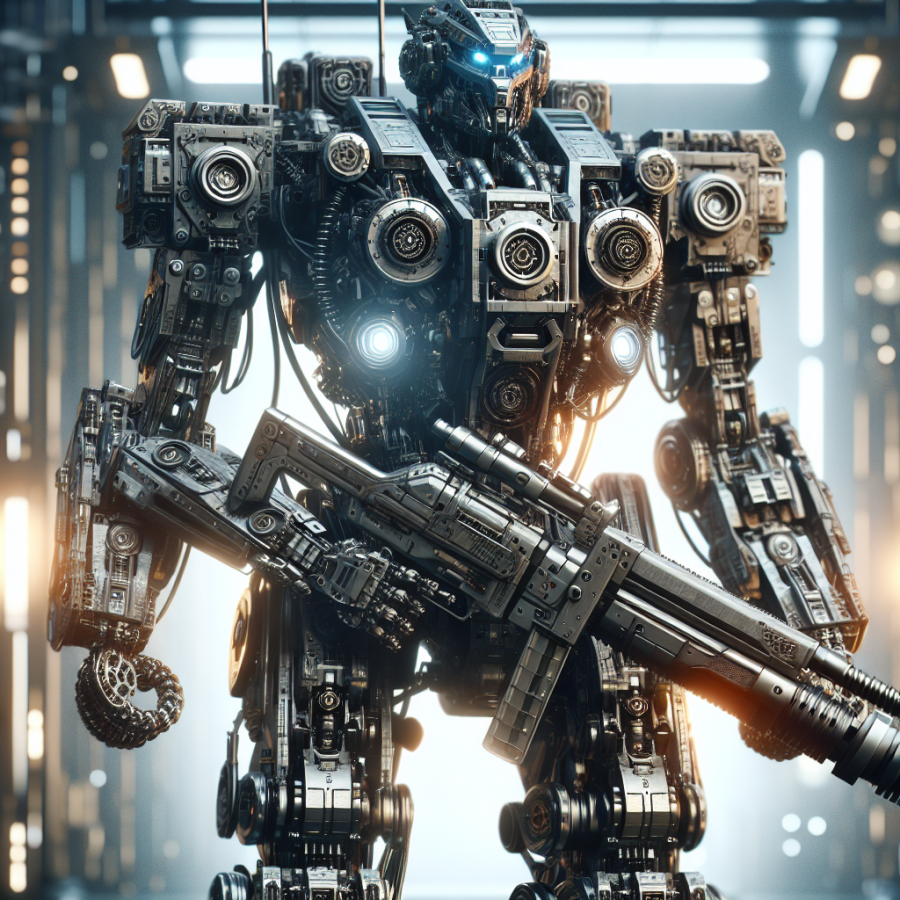

The latest chapter in the evolution of battlefield robotics is the advent of fully autonomous systems: robots that can operate independently in complex and dynamic environments. AI has reached a point where algorithms can fuse large volumes of data from multiple sensors, make decisions quickly, and improve their performance through training, loosely analogous to how a human soldier learns from experience. These autonomous systems can patrol areas, identify threats, and even engage targets without human intervention, albeit constrained by programmed rules of engagement intended to ensure compliance with ethical and legal standards.

The emergence of these autonomous warriors presents numerous advantages over their human counterparts. They can operate in hazardous environments without risking human lives, maintain operational capabilities over extended periods without fatigue, and process information from multiple sources far faster than a human could. This can increase mission effectiveness while reducing casualties among military personnel.

However, this evolution also raises several concerns, including ethical questions about delegating lethal decisions to machines. Additionally, malfunction or hacking poses significant operational and strategic risks. There is also an ongoing debate about the potential for an arms race in autonomous weapon systems, with many advocating for international agreements to regulate their deployment and use.

Moreover, integrating these autonomous warriors into conventional units underscores the need for new doctrine and training that address the challenges of human-robot interaction on the battlefield. Ensuring that combat robots effectively complement human actions, and that soldiers can trust and understand their automated counterparts, is crucial to the success of future military operations.

Strategic Advancements and Ethical Implications: Understanding Combat Robots' Impact on Modern Warfare

As autonomous combat robots become an increasingly common part of military arsenals around the world, they are reshaping the tactics and strategies of modern warfare. This shift is not just a matter of hardware; it depends on the close integration of artificial intelligence, machine learning, and robotics, which promises greater operational efficiency and introduces new forms of conflict management.

One key strategic advancement lies in decision speed. Combat robots, equipped with advanced sensors and computational power, can process information and react to threats far more quickly than human soldiers. This can provide a significant edge in missions where split-second decisions are crucial. For instance, autonomous drones can perform reconnaissance over dangerous territory, identify targets, and engage an enemy, all without putting human lives directly at risk.

Moreover, the endurance of combat robots presents a strategic advantage. Unlike human soldiers who require rest, robots can operate continuously, leading to sustained operations that can wear down an opponent's defenses and resources. This capability is particularly potent in long-duration missions, where human personnel might face fatigue-related challenges.

This brings us to the ethical implications, which are daunting: the advent of autonomous systems in warfare raises fundamental questions about responsibility and accountability. Selecting targets and taking lives without direct human oversight is fraught with moral considerations. Current international humanitarian law is predicated on the assumption that humans make these decisions, so deploying combat robots could require a radical overhaul of existing legal frameworks to ensure compliance with ethical standards.

There is also the challenge of "machine morality." How can we ensure that autonomous systems uphold the same ethical and legal standards as human combatants? As machines lack human judgment and empathy, there is an inherent risk of collateral damage or unintended consequences if these systems misinterpret data or if their algorithms fail in unpredictable ways.

In addition, the proliferation of combat robots could lead to an arms race, incentivizing nations to prioritize the development of more advanced autonomous weapon systems over diplomatic conflict resolution methods. This potential shift from human troops to robotic armies could make the decision to engage in conflict easier, as political leaders might be more willing to commit machines rather than humans to battle.

Lastly, there is the significant concern that autonomous combat robots could fall into the hands of non-state actors or rogue entities. The prospect of such powerful technologies being used by those without adherence to international norms and laws adds a layer of urgency to the debate over the control and regulation of autonomous weapon systems.